For my entire life my eyesight was so bad that I couldn't even read the license plate of the car in front of me. It seemed like the entire world was in on a cruel joke to make every sign just a bit too small. But last week I had my corneas cut open by a computerized femtosecond laser and reshaped by an excimer laser. Today, I can look up at the moon and literally see the Sea of Tranquility with near-perfect visual acuity. If LASIK isn't a testament to the feasibility of engineering the human body, then I don't know what is.

That got me thinking – what other aspect of being human might be a good target for augmentation? It might be useful to look at what people are doing today to compensate for deficits in their biology, then design a way to make those augmentations less external. I went from wearing glasses to wearing contact lenses to having lasers shave micrometers off the inside of my cornea. There's a clear progression from bulky and external to invisible and internal.

Computers also fit this bulky-to-invisible progression. We went from room-sized IBM mainframes to Dell desktops. Then from the MacBook Air to a 6" iPhone in our pocket. So, what computer comes after the iPhone?

For many people, the next logical step seems to be wearables – smart watches, glasses, and even rings. I personally doubt the next iPhone will be a wearable because of one fatal flaw: wearables have limited human I/O bandwidth.

Think of human I/O bandwidth as how fast your phone can get information into your brain, and vice versa. A large 4K monitor with a mechanical keyboard and a desktop OS will always be better than a 6" screen and touch keyboard.

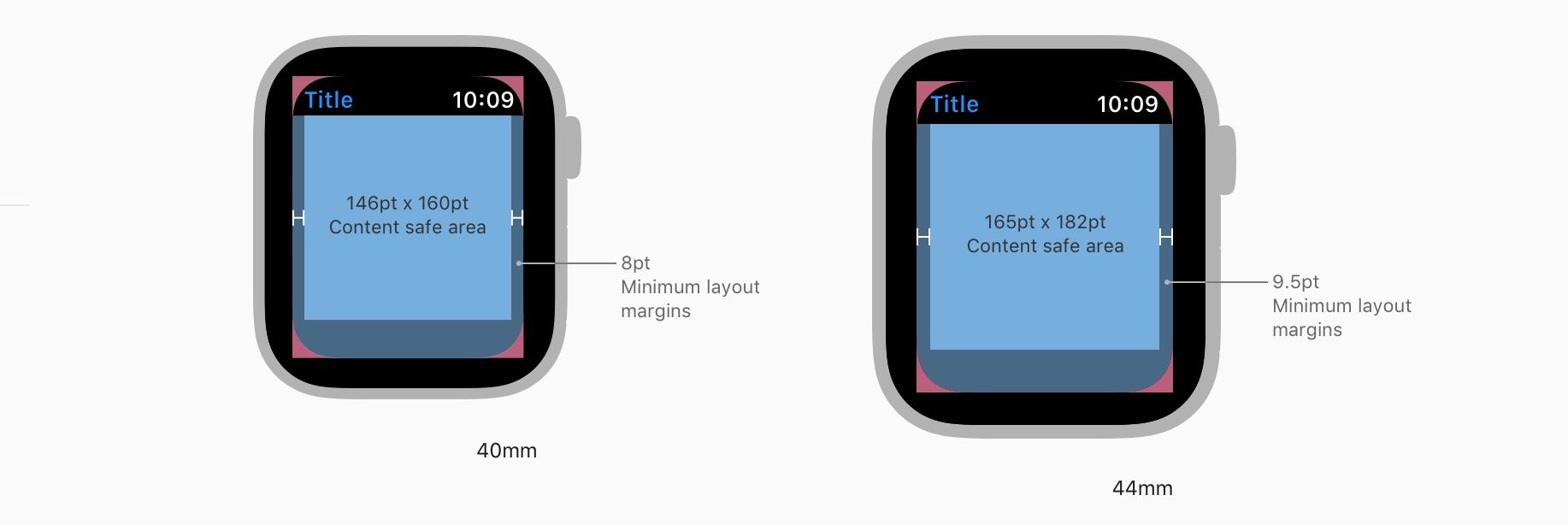

Wearables won't replace the iPhone because they make it harder to get information in and out of them. Apple Watch wearers still carry their iPhone in their pocket, except in the rare case when a phone is too cumbersome to carry, like on a run.

Apple understands the concept of human bandwidth quite well because each iPhone generation gets a slightly larger screen. It's also why most high-bandwidth work like programming is done on a desktop rather than an iPhone. It's not the computational power of the phone that prevents us from doing meaningful work. The constraint is the tiny screen and touch keyboard.

For all of computing's brief history there's been a clear correlation between the size of your computer and how useful it is. Today meaningful work is done on macOS, Windows, or Linux while everything else is done on iOS or Android. Wearables don't really make a dent in the category of meaningful work. Apple's Human Interface Guidelines for watchOS recommend developers avoid displaying more than 2 sentences on the watch's 44mm screen.

Getting information into the brain

Our brain is often said to process visual input 60,000x faster than text, so the next iPhone must have a graphical user interface. By some frequently cited estimates, 70 to 93 percent of all communication in a conversation is nonverbal. Our brains love to process visual data, so it'd be a waste to let most of our neurons sit idle while working. Also – imagine the horror of being forced to communicate with your computer only via Siri!

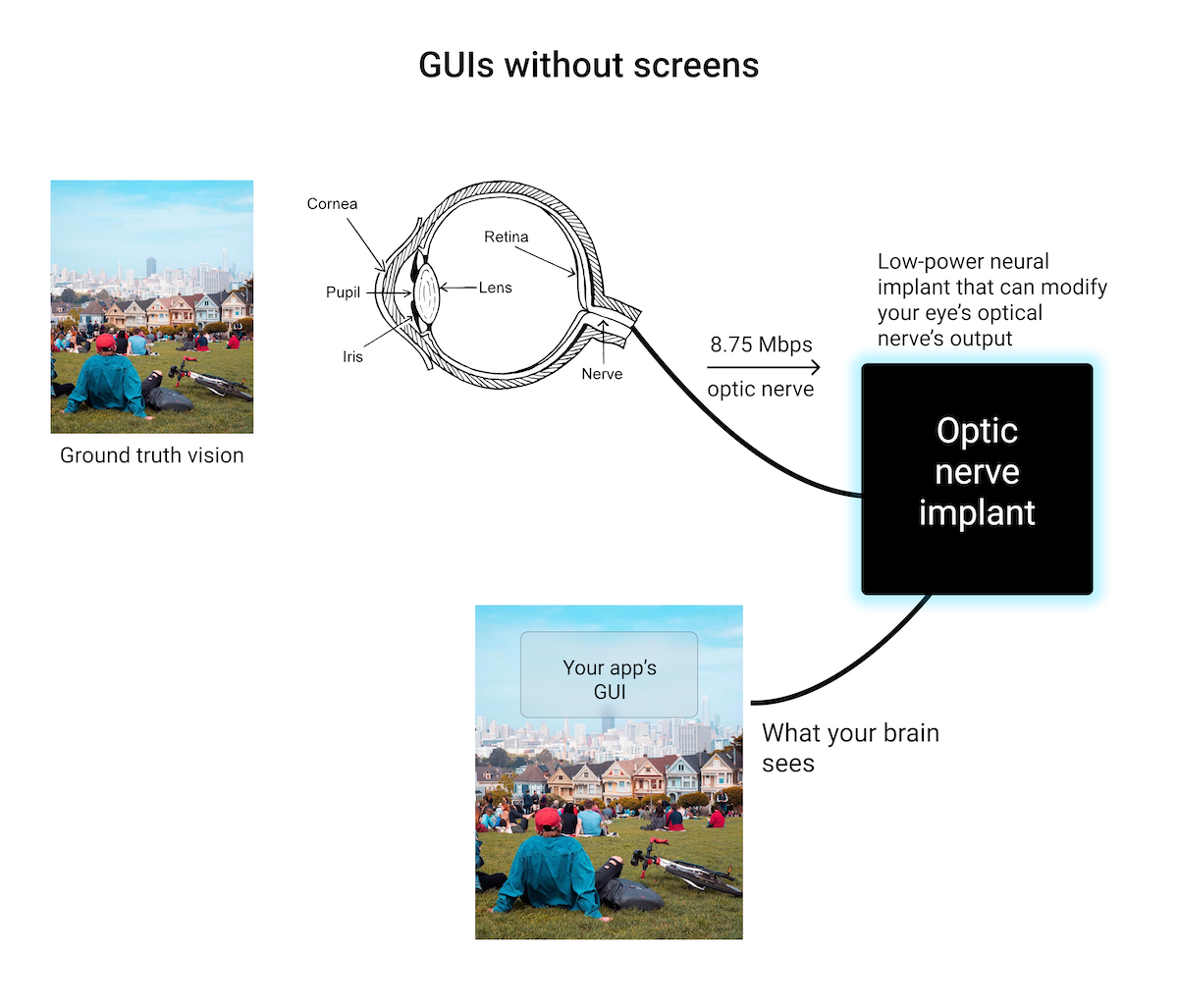

If the "next iPhone" must be capable of displaying a GUI large enough for serious work while also being significantly smaller, the only path forward is to depart from the concept of a screen entirely.

The Optic Nerve Implant

The human retina generates electrical signals and sends them via the optic nerve to the brain. But what kind of signals does it produce? The neuroengineering researcher Eduardo Fernandez developed a neural implant that gave vision to blind patients by turning a camera's byte stream output into electrical signals the brain could understand.

If the basic idea behind Gómez’s sight—plug a camera into a video cable into the brain—is simple, the details are not. Fernandez and his team first had to figure out the camera part. What kind of signal does a human retina produce? To try to answer this question, Fernandez takes human retinas from people who have recently died, hooks the retinas up to electrodes, exposes them to light, and measures what hits the electrodes. (His lab has a close relationship with the local hospital, which sometimes calls in the middle of the night when an organ donor dies. A human retina can be kept alive for only about seven hours.) His team also uses machine learning to match the retina’s electrical output to simple visual inputs, which helps them write software to mimic the process automatically. (via MIT Technology Review)

Max Hodak, the president of Neuralink, also explains that the problem with neural implants isn't understanding the signal, but rather getting it in the first place.

Decoding the signals isn’t the hard part: getting the signals captive is. Current recording technology, put simply, sucks. Really, really badly. Current implants only pick up around 4 cells per electrode, last an average of 3 years before being encapsulated in fibrous tissue by the brain (hypothesized to be an immune response) and rendered useless. (via maxhodak.com)

Getting a reliable signal from the brain is really, really hard. But what if we could somehow get the signal before it got to the brain?

A hypothetical device – the optic nerve implant – could intercept the signals sent from the retina to the brain via the optic nerve, and render a GUI over your vision. The optic nerve's bandwidth is only about 8.75 megabits per second, and decoding the optic nerve's signals could be done using modern ML techniques as demonstrated by Fernandez's artificial eye. More importantly, the optic nerve can be accessed without touching the brain at all, which would be far less invasive than an implant that directly stimulates the brain.
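To get a feel for how small that bandwidth figure is, here's a rough back-of-envelope comparison against uncompressed video. This is only a sketch: the 8.75 Mbps number is the one quoted above, while the 4K resolution, 24-bit color, and 60 fps are my own assumptions picked for illustration, and the function names are made up.

```python
# Back-of-envelope: optic nerve bandwidth vs. raw (uncompressed) video.
# OPTIC_NERVE_BPS is the figure quoted in the text; everything else
# (resolution, color depth, frame rate) is an illustrative assumption.

OPTIC_NERVE_BPS = 8.75e6  # bits per second


def raw_video_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Bitrate of an uncompressed video stream."""
    return width * height * bits_per_pixel * fps


def pixels_per_frame(budget_bps: float, bits_per_pixel: int, fps: int) -> float:
    """How many raw pixels per frame fit inside a given bit budget."""
    return budget_bps / (bits_per_pixel * fps)


uhd_bps = raw_video_bps(3840, 2160, 24, 60)
ratio = uhd_bps / OPTIC_NERVE_BPS
budget_pixels = pixels_per_frame(OPTIC_NERVE_BPS, 24, 60)

print(f"Raw 4K60 video: {uhd_bps / 1e9:.2f} Gbps")
print(f"That is about {ratio:.0f}x the quoted optic nerve bandwidth")
print(f"Raw pixels per frame that fit in the budget: {budget_pixels:.0f}")
```

Under these assumptions, raw 4K video needs over a thousand times more bits than the optic nerve carries. That suggests the retina's output is nothing like a raw pixel stream, which is presumably why a learned decoding step is needed at all.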

The optic nerve implant would be the only way to increase the human bandwidth of the next iPhone while also making it smaller and more internal. The hypothetical screen size can be as large as our field of view – and the device powering it would be millimeters in size.

We still have to solve the second part of the equation – the "in" part of I/O. The ideal input system would have as much human information bandwidth as using a mouse and keyboard while also being invisible when in use.

The first solution that jumps to mind would be to use natural language input, or some variation of it, like a speech-detecting wearable that captures your silent internal speech. However, natural language is a very bad idea for computer input. It's tedious and low-bandwidth compared to a mouse and keyboard or even a touchscreen. Imagine being forced to control your car by having a conversation with it, or even worse, imagine using voice to navigate a modern JS-heavy webpage!

In addition to conversational input being slow, it lacks the many benefits of GUIs. It doesn't leverage the brain's predisposition to prefer the visual over the auditory. Natural language also makes tasks that require continuous control, like playing a video game or moving a cursor, impossible.

Another way to get input into the device would be to use gestures, kind of like how we interact while using a VR headset. Gesture-driven input is better than speech, but reaching your arms out in front of you gets pretty tiring after just a few minutes. It also doesn't align with the goal of making this device more internal to the body – imagine having to wave your hands in a crowded NYC subway during rush hour, or while trying to fall asleep in bed.

Eye-based input

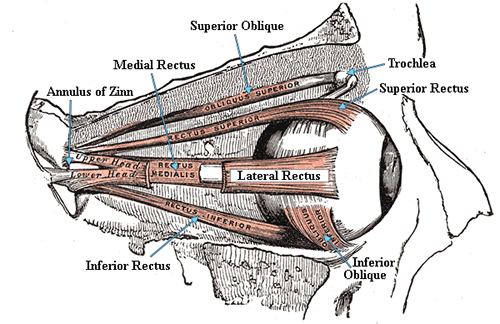

The ideal solution is to use our eyes as input. We have 6 extraocular muscles that are capable of incredibly fine-tuned movement, show no inertia when stopped suddenly, and almost never fatigue. Our eye muscles are the best way to control a cursor – possibly even more accurate than using a trackpad or mouse. The problem with eye-based input isn't that our eyes are inaccurate, but rather that eye-tracking cameras are unable to detect subtle eye movements.

In addition to rendering a GUI, the optic nerve implant can also use our gaze as a continuous input. It'd be like using a touchscreen, but instead of tapping with a finger you'd just glance at the UI element you want to interact with. Using the eyes for both input and output would free up our hands, so we'd be able to use a computer while doing almost anything.
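Here's a minimal sketch of what "glance to tap" could look like in software. It assumes the implant hands us a stream of timestamped gaze coordinates, and it uses dwell time (holding your gaze on an element for half a second) as the stand-in for a tap. The `Element` type, the `dwell_select` function, and the 0.5 second threshold are all hypothetical choices of mine, not part of any real API.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class Element:
    """A rectangular UI element in the field of view (hypothetical type)."""
    name: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, gx: float, gy: float) -> bool:
        return self.x <= gx < self.x + self.w and self.y <= gy < self.y + self.h


def hit_test(elements: Iterable[Element], gx: float, gy: float) -> Optional[Element]:
    """Return the first element under the gaze point, if any."""
    for el in elements:
        if el.contains(gx, gy):
            return el
    return None


def dwell_select(
    samples: Iterable[Tuple[float, float, float]],
    elements: List[Element],
    dwell_s: float = 0.5,
) -> List[str]:
    """Activate an element once the gaze has rested on it for dwell_s seconds.

    samples is a sequence of (timestamp_seconds, x, y) gaze points.
    Each continuous look at an element fires at most once.
    """
    activated: List[str] = []
    current: Optional[Element] = None
    since = 0.0
    fired = False
    for t, gx, gy in samples:
        el = hit_test(elements, gx, gy)
        if el != current:
            # Gaze moved to a different element (or to empty space): restart timer.
            current, since, fired = el, t, False
        elif el is not None and not fired and t - since >= dwell_s:
            activated.append(el.name)
            fired = True
    return activated


buttons = [Element("ok", 0, 0, 100, 50), Element("cancel", 120, 0, 100, 50)]
gaze = [
    (0.0, 10, 10), (0.2, 12, 11), (0.6, 11, 12),  # rests on "ok" long enough
    (0.7, 130, 10), (0.9, 131, 10),               # only glances at "cancel"
    (1.0, 300, 300),                              # looks away
]
print(dwell_select(gaze, buttons))
```

Dwell time is just one way to tell a deliberate "click" from a passing glance. A real device would need something smarter so you don't trigger buttons simply by reading them.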

Imagine if you could write code while running through the park. Or play a competitive first person shooter that stretches across your FOV while sitting in a subway train during rush hour. You could stream your vision to your friends while on a trip to Japan. Or write a shader to make your vision look like a Pixar movie. Changing reality would become as easy as writing to your vision's framebuffer.
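As a toy illustration of the framebuffer idea, a "vision shader" is just a function applied to every pixel before it's rendered. The sketch below models the framebuffer as a plain list of rows of RGB tuples and applies a posterize effect for a flat, cartoon-like look. The data layout and the `posterize` and `apply_shader` names are assumptions for illustration only; no such device or API exists.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[int, int, int]
Framebuffer = List[List[Pixel]]


def posterize(pixel: Pixel, levels: int = 4) -> Pixel:
    """Snap each 0-255 color channel to one of `levels` evenly spaced values."""
    step = 255 / (levels - 1)
    r, g, b = (round(round(c / step) * step) for c in pixel)
    return (r, g, b)


def apply_shader(framebuffer: Framebuffer, shader: Callable[[Pixel], Pixel]) -> Framebuffer:
    """Run a per-pixel shader over the whole frame and return a new frame."""
    return [[shader(p) for p in row] for row in framebuffer]


frame: Framebuffer = [
    [(200, 30, 128), (255, 255, 255)],
    [(0, 0, 0), (90, 90, 90)],
]
stylized = apply_shader(frame, posterize)
print(stylized)
```

A real shader would run on a GPU-like chip rather than in a Python loop, but the mental model is the same: read a pixel, change it, write it back.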

The signal processing techniques needed to convert our retina's electrical signals into a bytestream likely already exist today. We're also capable of manufacturing SoCs that are just millimeters in size, and the surgical techniques to access the optic nerve are minimally invasive and well-understood. The hardest part about creating this device seems to be getting a reliable signal from the optic nerve. It's likely only a matter of time before Apple posts an opening for a neural engineer.